Installing Autoware + Docker (cont'd)

This is a continuation of Installing Autoware on Ubuntu 20.04.

I'm starting over after working with the System76 support engineers to address some issues I ran into. The tl;dr is that I ended up with a mix of packages from the System76 apt repositories and the NVIDIA apt repositories, and these packages didn't play well together. I removed all of the System76 packages and reinstalled exclusively from the NVIDIA apt repositories.

The current state of my drivers:

```
# nvidia-smi
Mon Oct 31 12:52:32 2022
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 515.65.07 Driver Version: 515.65.07 CUDA Version: 11.7 |
|-------------------------------+----------------------+----------------------+
| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |
| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |
| | | MIG M. |
|===============================+======================+======================|
| 0 NVIDIA GeForce ... On | 00000000:01:00.0 Off | N/A |
| N/A 41C P8 5W / N/A | 168MiB / 8192MiB | 0% Default |
| | | N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes: |
| GPU GI CI PID Type Process name GPU Memory |
| ID ID Usage |
|=============================================================================|
| 0 N/A N/A 1393 G /usr/lib/xorg/Xorg 32MiB |
| 0 N/A N/A 2203 G /usr/lib/xorg/Xorg 4MiB |
| 0 N/A N/A 4500 C /usr/NX/bin/nxnode.bin 126MiB |
+-----------------------------------------------------------------------------+
```
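To compare this header against later runs (for example, before and after a driver upgrade), the two version numbers can be pulled out of the text with a small helper. This is a minimal sketch that assumes the header layout shown above, with `Driver Version: X` and `CUDA Version: Y` on the same line; `nvsmi_versions` is a hypothetical name, not part of any NVIDIA tool.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read nvidia-smi's default output on stdin and print
# "<driver version> <CUDA version>" taken from the header line.
# Assumes both fields appear on the same line, as in the output above.
nvsmi_versions() {
  sed -n 's/.*Driver Version: *\([0-9.]*\).*CUDA Version: *\([0-9.]*\).*/\1 \2/p' | head -n 1
}
```

For the output above, `nvidia-smi | nvsmi_versions` should print `515.65.07 11.7`. If only the driver version is needed, `nvidia-smi --query-gpu=driver_version --format=csv,noheader` avoids text parsing altogether.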

NVIDIA packages

```
# dpkg -l | grep nvidia-
ii libnvidia-cfg1-515:amd64 515.65.07-0ubuntu1 amd64 NVIDIA binary OpenGL/GLX configuration library
ii libnvidia-common-515 515.65.07-0ubuntu1 all Shared files used by the NVIDIA libraries
ii libnvidia-compute-515:amd64 515.65.07-0ubuntu1 amd64 NVIDIA libcompute package
ii libnvidia-compute-515:i386 515.65.07-0ubuntu1 i386 NVIDIA libcompute package
ii libnvidia-decode-515:amd64 515.65.07-0ubuntu1 amd64 NVIDIA Video Decoding runtime libraries
ii libnvidia-decode-515:i386 515.65.07-0ubuntu1 i386 NVIDIA Video Decoding runtime libraries
ii libnvidia-encode-515:amd64 515.65.07-0ubuntu1 amd64 NVENC Video Encoding runtime library
ii libnvidia-encode-515:i386 515.65.07-0ubuntu1 i386 NVENC Video Encoding runtime library
ii libnvidia-extra-515:amd64 515.65.07-0ubuntu1 amd64 Extra libraries for the NVIDIA driver
ii libnvidia-fbc1-515:amd64 515.65.07-0ubuntu1 amd64 NVIDIA OpenGL-based Framebuffer Capture runtime library
ii libnvidia-gl-515:amd64 515.65.07-0ubuntu1 amd64 NVIDIA OpenGL/GLX/EGL/GLES GLVND libraries and Vulkan ICD
ii nvidia-compute-utils-515 515.65.07-0ubuntu1 amd64 NVIDIA compute utilities
ii nvidia-dkms-515 515.65.07-0ubuntu1 amd64 NVIDIA DKMS package
ii nvidia-driver-515 515.65.07-0ubuntu1 amd64 NVIDIA driver metapackage
ii nvidia-kernel-common-515 515.65.07-0ubuntu1 amd64 Shared files used with the kernel module
ii nvidia-kernel-source-515 515.65.07-0ubuntu1 amd64 NVIDIA kernel source package
ii nvidia-settings 520.61.05-0ubuntu1 amd64 Tool for configuring the NVIDIA graphics driver
ii nvidia-utils-515 515.65.07-0ubuntu1 amd64 NVIDIA driver support binaries
ii screen-resolution-extra 0.18build1 all Extension for the nvidia-settings control panel
ii xserver-xorg-video-nvidia-515 515.65.07-0ubuntu1 amd64 NVIDIA binary Xorg driver
```
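One way to spot the kind of mixed install described at the top is to compare every installed NVIDIA package against the `nvidia-driver-<branch>` metapackage. This is a heuristic sketch reading `dpkg -l` output on stdin; the function name is hypothetical, and packages that are not split per driver branch (such as `nvidia-settings`) can legitimately differ, so a hit is a prompt to look closer rather than proof of a problem.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `dpkg -l` output on stdin and print every
# installed package whose name contains "nvidia" but whose version differs
# from the nvidia-driver-<branch> metapackage. Heuristic only.
nvidia_version_mismatches() {
  awk '
    $1 == "ii" {
      n++; name[n] = $2; ver[n] = $3
      if ($2 ~ /^nvidia-driver-[0-9]+(:[a-z0-9]+)?$/) driver = $3
    }
    END {
      if (driver == "") {
        print "no nvidia-driver-* package found" > "/dev/stderr"
        exit 1
      }
      for (i = 1; i <= n; i++)
        if (name[i] ~ /nvidia/ && ver[i] != driver) print name[i], ver[i]
    }'
}
```

Run it as `dpkg -l | nvidia_version_mismatches`. Fed the listing above, it should report only `nvidia-settings 520.61.05-0ubuntu1`.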

CUDA packages

```
# dpkg -l | grep cuda-
ii cuda-repo-ubuntu2004-11-6-local 11.6.0-510.39.01-1 amd64 cuda repository configuration files
ii cuda-toolkit-11-6-config-common 11.6.55-1 all Common config package for CUDA Toolkit 11.6.
ii cuda-toolkit-11-8-config-common 11.8.89-1 all Common config package for CUDA Toolkit 11.8.
ii cuda-toolkit-11-config-common 11.8.89-1 all Common config package for CUDA Toolkit 11.
ii cuda-toolkit-config-common 11.8.89-1 all Common config package for CUDA Toolkit.
```

Re-install CUDA 11.6

Based on these instructions with a slight modification:

```
$ cuda_version=11-6
$ sudo apt install cuda-${cuda_version} --no-install-recommends
```

I ignored warnings like `W: Sources disagree on hashes for supposely identical version '11.6.55-1' of 'cuda-cudart-11-6:amd64'.`, which I haven't tracked down yet.

After upgrading:

```
$ nvidia-smi
Mon Oct 31 15:52:57 2022
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 520.61.05 Driver Version: 520.61.05 CUDA Version: 11.8 |
|-------------------------------+----------------------+----------------------+
```

Start the Autoware container

```
$ rocker --nvidia --x11 --user --volume $HOME/Development/autoware --volume $HOME/Development/autoware_map -- ghcr.io/autowarefoundation/autoware-universe:latest-cuda
...
docker: Error response from daemon: could not select device driver "" with capabilities: [[gpu]].
```

As documented here, this error happens because the nvidia-container-toolkit is missing. To fix this:

```
sudo apt-get update && sudo apt-get install -y nvidia-container-toolkit
```

I was still getting the same error, so I re-installed the nvidia-docker2 package that I had purged earlier, based on these instructions:

```
distribution=$(. /etc/os-release;echo $ID$VERSION_ID) \
   && curl -s -L https://nvidia.github.io/libnvidia-container/gpgkey | sudo apt-key add - \
   && curl -s -L https://nvidia.github.io/libnvidia-container/$distribution/libnvidia-container.list | sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list
sudo apt-get update
sudo apt-get install -y nvidia-docker2
sudo systemctl restart docker
```

At this point, re-running the rocker command worked, and it dropped me into the container.
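Since the fix involved both installing packages and restarting Docker, it can help to confirm that Docker actually knows about the NVIDIA runtime. This is a rough sketch, assuming the nvidia-docker2 package has registered a runtime named `nvidia` in `/etc/docker/daemon.json` (which, as far as I know, is what it does by default). It is a plain text match rather than a JSON parser, and `has_nvidia_runtime` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if the given Docker daemon config (default
# /etc/docker/daemon.json) appears to register a runtime named "nvidia".
# Crude text match; assumes the usual pretty-printed daemon.json layout.
has_nvidia_runtime() {
  local cfg="${1:-/etc/docker/daemon.json}"
  [ -r "$cfg" ] || return 1
  grep -q '"runtimes"' "$cfg" && grep -q '"nvidia"[[:space:]]*:' "$cfg"
}
```

After any change to that file, `sudo systemctl restart docker` is what makes the daemon pick it up. `docker info` also lists the registered runtimes on its `Runtimes:` line, which is a useful cross-check.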

Hitting rviz2 errors

I'm hitting the same errors described in this rviz2 discussion:

```
$ rviz2
QStandardPaths: XDG_RUNTIME_DIR not set, defaulting to '/tmp/runtime-tleyden'
libGL error: MESA-LOADER: failed to retrieve device information
libGL error: MESA-LOADER: failed to retrieve device information
[ERROR] [1666389050.804997231] [rviz2]: RenderingAPIException: OpenGL 1.5 is not supported in GLRenderSystem::initialiseContext at /tmp/binarydeb/ros-galactic-rviz-ogre-vendor-8.5.1/.obj-x86_64-linux-gnu/ogre-v1.12.1-prefix/src/ogre-v1.12.1/RenderSystems/GL/src/OgreGLRenderSystem.cpp (line 1201)
```

Use the System76 version of nvidia prime-select

There is a tool called nvidia prime-select, which essentially controls whether GUI apps default to running on the NVIDIA graphics card or on the graphics built into the CPU.

System76 machines, however, have their own version of nvidia prime-select that supports the following modes (details here):

- nvidia: use the NVIDIA GPU for everything
- integrated: use the CPU's integrated graphics for everything
- hybrid: use the CPU's integrated graphics for most things, but allow an override mechanism to run specific apps on the NVIDIA GPU

I have not been able to figure out how to use hybrid mode and use the override environment variables to force apps onto the GPU from within the container, but I was able to brute force it by switching from hybrid to nvidia mode.

```
system76-power graphics nvidia
<reboot>
```

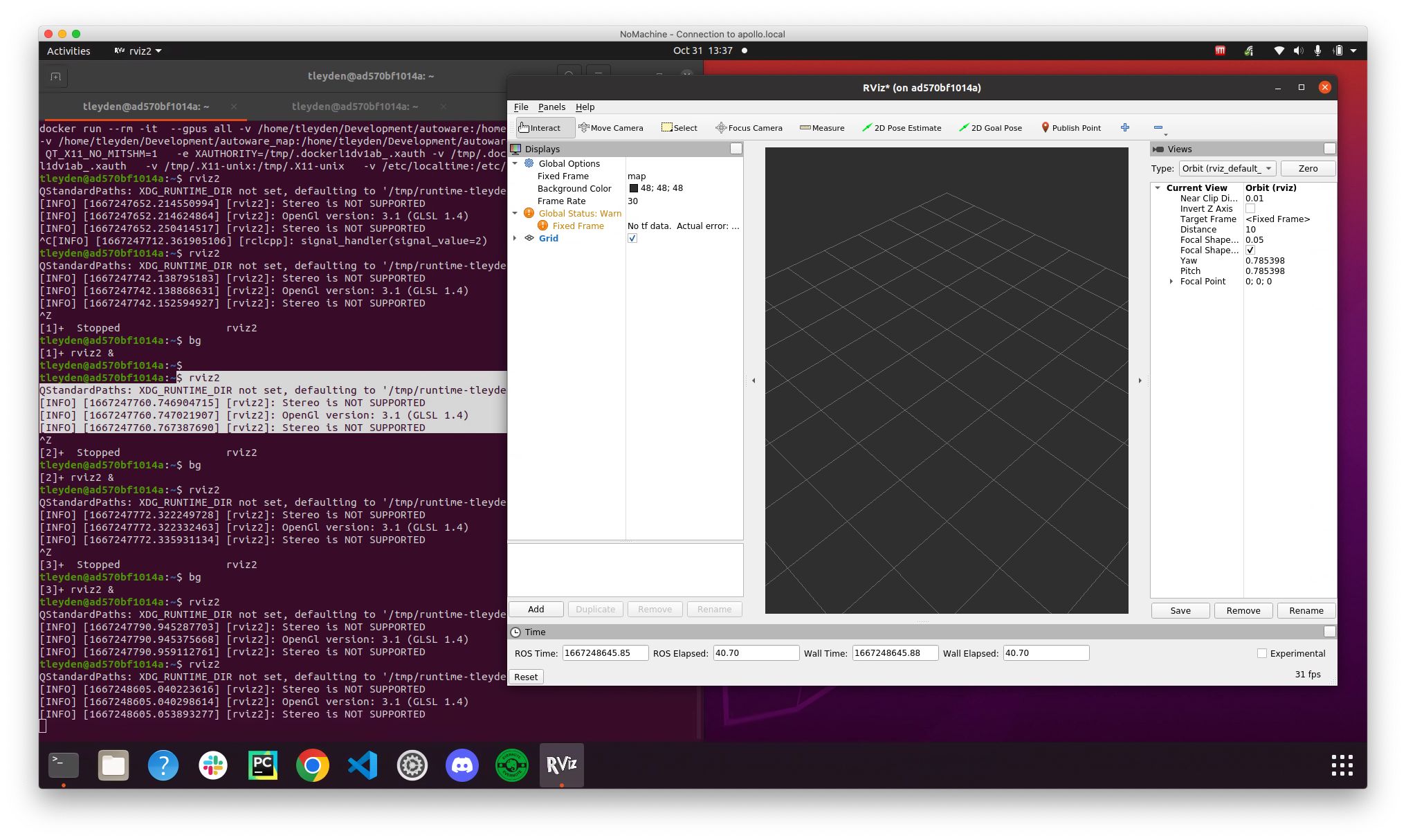

Rviz2 running on the GPU

Now when I start the autoware container and run rviz2 it no longer shows any errors:

```
$ rviz2
QStandardPaths: XDG_RUNTIME_DIR not set, defaulting to '/tmp/runtime-tleyden'
[INFO] [1667247760.746904715] [rviz2]: Stereo is NOT SUPPORTED
[INFO] [1667247760.747021907] [rviz2]: OpenGl version: 3.1 (GLSL 1.4)
[INFO] [1667247760.767387690] [rviz2]: Stereo is NOT SUPPORTED
```

Unfortunately, it's not straightforward to verify this with nvidia-smi, which does not seem to show processes running inside containers. I was, however, able to verify it indirectly in two ways:

- In my experience, the only way rviz2 would even start from a docker container and run on the integrated CPU graphics was to pass in /dev/dri as a container argument. Without this argument, it must be running on the GPU.
- I started 5 rviz2 processes and verified with nvidia-smi that the amount of available GPU memory steadily decreased each time I started another rviz2 process.
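The second check can be scripted on the host. This is a sketch under a few assumptions: `nvidia-smi` runs on the host (its device-wide memory figures include the container's usage even though its process list does not), the first GPU is the one of interest, and each `rviz2` is started by hand inside the container. All function names here are hypothetical.

```bash
#!/usr/bin/env bash
# Free memory (MiB) on the first GPU, from nvidia-smi's query mode.
gpu_mem_free_mib() {
  nvidia-smi --query-gpu=memory.free --format=csv,noheader,nounits | head -n 1
}

# Succeed if there are at least two samples and each one is strictly
# smaller than the previous one.
strictly_decreasing() {
  [ "$#" -ge 2 ] || return 1
  local prev="$1" cur
  shift
  for cur in "$@"; do
    [ "$cur" -lt "$prev" ] || return 1
    prev="$cur"
  done
}

# Hypothetical interactive probe: take a baseline, then one sample after
# each rviz2 you start in the container (press Enter once it is up).
record_free_mem() {
  local n="${1:-5}" i
  local -a samples
  samples=("$(gpu_mem_free_mib)")
  for ((i = 1; i <= n; i++)); do
    read -r -p "Start rviz2 #$i in the container, then press Enter: "
    samples+=("$(gpu_mem_free_mib)")
  done
  echo "Free GPU memory (MiB): ${samples[*]}"
  strictly_decreasing "${samples[@]}"
}
```

`record_free_mem 5` prints the samples and succeeds only if free memory dropped after every launch, which is the pattern described above.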

Future work: making it work in hybrid mode

The problem with running everything on the GPU is that it uses up precious GPU memory, even for things that don't need to be on the GPU.

From my experience so far, the normal approach to forcing things to run on the GPU does not work in docker containers, but I'm hoping someone will answer my question on the NVIDIA forum with more details.
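For reference, here is a sketch of NVIDIA's documented PRIME render offload environment variables, which are presumably the kind of per-app override that hybrid mode relies on; on the host they are set per command. Whether they take effect inside the rocker container is exactly the open question above, so treat this as untested there. `run_on_nvidia` and `renderer_is_nvidia` are hypothetical helper names, and the check assumes `glxinfo` (from the mesa-utils package) is installed.

```bash
#!/usr/bin/env bash
# Run a command with NVIDIA PRIME render offload requested for GLX apps.
run_on_nvidia() {
  __NV_PRIME_RENDER_OFFLOAD=1 __GLX_VENDOR_LIBRARY_NAME=nvidia "$@"
}

# Read `glxinfo -B` output on stdin; succeed if the OpenGL renderer is NVIDIA.
renderer_is_nvidia() {
  grep -q '^OpenGL renderer string:.*NVIDIA'
}
```

On the host, `run_on_nvidia glxinfo -B | renderer_is_nvidia && echo "rendering on the NVIDIA GPU"` would confirm the override works; running the same check inside the container would show whether it carries over.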

In the meantime, at least the system76-power graphics nvidia mode worked, and I would guess that using nvidia prime-select to set the default to the GPU would also work.